The Missing Section of LLM Model Cards

Fixing the flaws of the everything systems

09 Aug 2024 ⋅ Sean McGregor, Nick Judd, Sarah Anoke ⋅ 4 min ⋅ #llm#ai

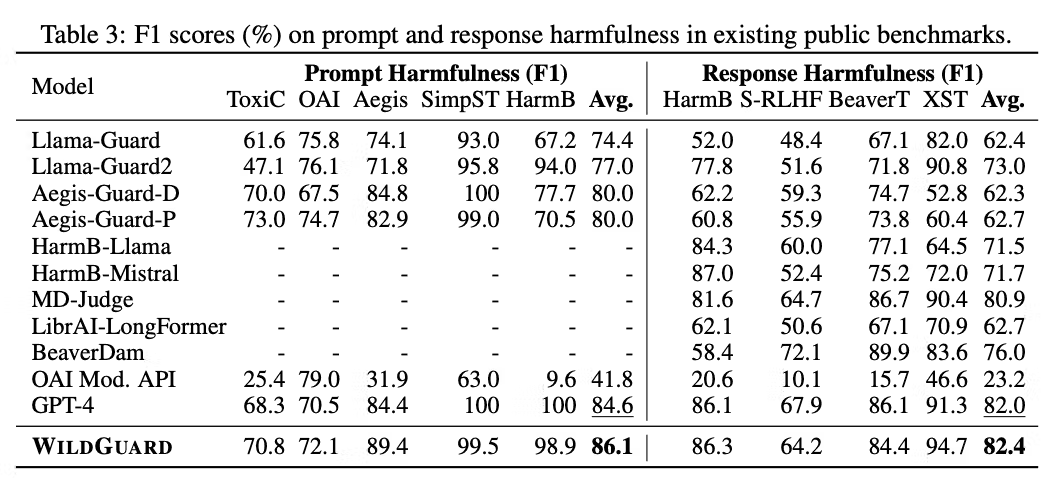

Large Language Models (LLMs) are increasingly integrated into a broad variety of specific products, but LLM safety research today focuses on a general sense of safety. Take for example the safety scoring produced for Allen Institute for AI’s (Ai2’s) WildGuard system, which presents the following comparison across models:

A collection of benchmarks as reported in for the WildGuard system.

A collection of benchmarks as reported in for the WildGuard system.

Table 1. Safety benchmarks reported for WildGuard related to various LLM hazards, such as toxicity and self-harm.

These benchmarks are commonly reported for newly released LLMs and they represent the forefront of LLM safety documentation. Although necessary for safety engineering, these benchmarks are not sufficient documentation for a person making deployment decisions for a general purpose system. For example, can WildGuard be relied upon to replace human moderators of English social network content in South Africa (See footnote 1)? Safety benchmarks will tell you how a system performs on datasets meant to simulate the performance of some general task, often with no particular use case in mind. Someone making a deployment decision about a system needs to know what hazards are likely to emerge when that system is applied to a set of specific tasks in an operating context. These are entirely separate questions, and it is often unclear how data on benchmarks might help someone who needs to reason about deploying a model. There is a substantial gap between model documentation and model safety decisions.

Most recently, this gap has challenged competition design for the Generative Red Team 2 (GRT2) at DEF CON 32 in August. GRT2's goal is to explore the intersection between the security and LLM safety communities. These two groups of practitioners do not speak the same language and have struggled to find common ground. The security community thinks in terms of "flags" that can be captured, such as breaking into systems, or producing undesirable system behaviors. These attack "vulnerabilities" can be reported to companies through a responsible disclosure process that took decades to develop between companies, hackers, and governments. Ideally, LLM safety could learn from these established practices. Unfortunately, the vulnerability modality is an imperfect fit to the safety problems exhibited by LLMs. A state of the art LLM correctly refusing to answer 99 percent of prompts that would generate a toxic response will still produce billions of instances of toxic content. Each prompt that yields toxic output may be considered a vulnerability. A system operator that is obliged to disclose vulnerabilities might be required to separately report billions of prompts, even if they all share an underlying cause. As a result, policy makers are considering adopting a new term of art for the probabilistic failures of systems like LLMs: enter the "flaw."

A flaw is, "...any unexpected model behavior that is outside of the defined intent and scope of the model design." A new flaw may require changes to the model documentation even if it doesn’t require updating the model itself. However, as defined, LLMs cannot be flawed because their model cards state properties without stating system intent and scope.

Without guidance on intent and scope, users become responsible for determining how to use the general purpose system. If the system is somehow flawed for a particular use case, then that use case exists in the mind of the user and there is nothing to report against. With GRT2, DSRI and Ai2 are bringing “intent” to model cards to enable flaw reporting. We are adding the missing section of model cards: documentation of the use cases.

The model card will be battle tested by DEF CON attendees who will look for all the ways in which WildGuard+the Open Language Model (OLMo) may be flawed and present hazards to their users. The model card will be a dynamic artifact helping the world better understand where OLMo might be fit for purpose and where additional research engineering is required.

Ai2’s participation in GRT2 is to be commended for its open approach to safety. The security community will have the capacity to uncover a great many flaws that will challenge researchers and engineers over the decades to come, and the world will have an example of how LLM vendors can interface with the broader public eager to probe the safe operation of LLM systems.

We encourage everyone to join us in stress-testing OLMo and exploring the design space of "flaw reports." Please check back as we post or link to the GRT2's OLMo model card with specific claims and a collection of flaw reports serving as examples for GRT2.

Acknowledgements

We are building on the conversations and work of many people and institutions, including the researchers of Ai2 and the Digital Safety Research Institute, GRT2 co-organizers including and especially Sven Cattell, and several policy makers now considering operationalization of the “flaw report.”

Footnotes

(1) When it comes to large language models, there are too many uses to count. To select a focus for use cases and specific tasks, DSRI advocates a utilitarian approach based on how likely people are to use a model for a specific use case paired with the likelihood and severity of harm in that use case. The goal is to build an evidence-based argument about whether (or not) a system is suitable for a given purpose, until a “whitelist” of claimed uses and a “blacklist” of disclaimed uses covers most or all of a model’s likely uses.