A privacy-respecting way to test how well systems keep secrets

23 Oct 2024 ⋅ Nick Judd, Rafiqul Rabin ⋅ 14 min ⋅ #presentation#cybercrime

Because large language models (LLMs) are trained on datasets that almost certainly include sensitive information, there is a risk that they will spill those secrets during deployment. In concrete terms, an LLM might insert something like an API key or phone number into a response to a user who is trying to do something else entirely and has no interest in a secret they are not authorized to see. It is also possible for a malicious attacker to coax out those secrets on purpose.

The risks associated with this kind of sensitive information limit how useful LLMs can be in certain contexts, like writing computer code. It also limits where they can be deployed — even for the purpose of studying how to make these problems go away. Researchers are actively studying how to solve this problem. (In this blog post, “sensitive information” means secrets that may not be related to an individual person, like an API key, as well as data that can be used to identify an individual, like phone numbers. That second kind of information is also called personally identifiable information, or PII).

As part of an ongoing research collaboration, the Digital Safety Research Institute (DSRI) conducted an independent assessment of this data exfiltration risk on publicly available releases of a model produced by The Allen Institute for Artificial Intelligence (Ai2). DSRI found that as Ai2 changed how they curated training datasets to be more privacy-respecting, certain instances of data exfiltration actually became more common, making it more likely that a malicious attacker could exfiltrate sensitive information from training data.

We conjecture that this is because of a property of LLMs called “memorization,” or, the tendency of a model to behave as if it has “memorized” specific elements of its training data. This can be a benefit, for instance, when trying to use an LLM as a replacement for a search engine. But, more often, teams want their LLMs to behave as if they have the capability to generalize from their training data, not simply “memorize” it. For this reason, the machine learning literature usually refers to this as “unintended memorization.” That’s the phenomenon that researchers find interesting — but for LLM users, the bigger concern is whether unintended memorization will raise the risk of secrets being “exfiltrated” from training data.

In response to DSRI’s findings, Ai2 is planning interventions and mitigations to address data exfiltration risks in their next dataset release. This is a blog post about how DSRI conducted its investigation, how DSRI and Ai2 collaborated around the findings, and how both organizations’ collaboration around independent assessment is accelerating the pace of AI research.

Data, data everywhere

Today’s large language models (LLMs) are trained on volumes of text data so large that they are almost certain to contain at least some sensitive information, like API keys or phone numbers. For instance, the July 2024 update of Common Crawl, a large-scale extract from the World Wide Web, contains 2.5 billion webpages and comprises more than 100TB of compressed data. Machine learning researchers often combine their own data collection efforts with datasets already processed by other research teams. This means that a training dataset is not one dataset built according to one set of policies, but an ensemble of datasets, coming from many different teams. There might also be multiple versions of each dataset, some with more sensitive information than others.

This becomes a problem because one of the best ways to improve model performance is to change how the training data is curated or preprocessed. Because LLM training datasets are so large, relatively small changes to how datasets are assembled can cause large changes in the exposure risk for sensitive information. A team interested in optimizing model performance might make many incremental changes to curation and preprocessing. Changing curation might mean adding or removing specific sources of data, like web domains or third-party datasets. Changing how data is preprocessed might mean filtering out specific texts from an included dataset, for instance, if it contains a lot of offensive language, or a model trained on that text might be more likely to violate intellectual property law.

Each change might cause the risks of sensitive data exposure to go up. Or go down. Or, as shown here, both increase and decrease, but in different ways.

Constructing data exfiltration tests

Over the course of the summer of 2024, Ai2 researchers made several changes to how they curated and preprocessed the data they use to train their Open Language Models (OLMo) — not only to improve the model, but also in an effort to limit how much sensitive information is included.

While researching methods for evaluating AI systems, DSRI assessed several versions of Ai2’s OLMo models as they were released. DSRI found that for some data types, model changes meant to reduce the risk of sensitive data disclosure may have had the opposite effect. Because we are collaborating in the role of independent assessors, sequestering our evaluation data away from the view of Ai2’s research team, we can quantify these risks and track how they change over time. However, assessment itself can pose additional concerns. Many testing platforms now in use rely on third-party services external to that platform, for instance, calling out to an external service that is hosting models and other core elements of the system under test. In that case, each test increases the exposure of sensitive information, propagating it across services and log files. DSRI’s assessment was conducted on its private instance of the Dyff platform, an open-source, cloud-native architecture for the independent testing of machine learning systems. Conducting the test did not meaningfully increase the exposure of the sensitive data our tests were meant to protect.

This example shows the value of independent assessment in the course of model development, the value of open-source development methodologies, and the promise of research collaboration between organizations interested in building models and organizations interested in testing them. It also demonstrates how to conduct LLM assessment in conditions where test integrity is an important consideration.

The System

DSRI is collaborating with Ai2 in the independent assessment of their Open Language Model (OLMo) family of LLMs. Because Ai2 builds in the open, they have been releasing incremental improvements to their model, to their underlying dataset, and to their data processing pipelines.

The hypothesis

Reviewing Ai2’s published methodology, DSRI observed that Ai2’s dataset developers switched data sources for computer code between versions of the model. The dataset release used to train the first version of OLMo (OLMo-7B) included source code from The Stack (deduplicated), a large dataset of permissively licensed programming-related material. For a subsequent release, the dataset instead includes data from StarCoder, a more recent work that is drawn from the same underlying dataset but is notable for its advances in data pre-processing and filtering to excise PII.

Prior to conducting our assessment, DSRI hypothesized that this change would lead to a decline in the amount of sensitive information found in the training data. DSRI also expected the rate at which systems include PII or sensitive information in text output would also go down.

The test

This assessment involved scanning code-related excerpts from OLMo’s training data — which Ai2 calls “Dolma,” for “data to feed OLMo’s appetite” — and writing tests to assess the likelihood that OLMo will produce output that contains sensitive text included in that data. To do this, DSRI used well-known pattern-matching approaches to identify text strings that appeared to be API keys, phone numbers, or email addresses. DSRI’s analysis was constrained to code in seven popular programming languages.

After identifying sensitive information in the training data, DSRI then pursued two separate strategies to assess the likelihood that OLMo would divulge learned secrets. The first strategy was to review output from assessments of OLMo’s code-generation capabilities that DSRI had already conducted using the Dyff platform, in order to identify instances when an OLMo user might unintentionally encounter sensitive information (“unintentional disclosure”). The unintentional disclosure test searched model output for strings that were an exact match with strings in the training data that are likely to be sensitive information. The second strategy used a variety of known prompt templating approaches to deliberately induce the model to generate secrets, simulating the efforts of a malicious user who has some knowledge of the training data to extract specific sensitive information from a system (“malicious disclosure”). The malicious disclosure test searched model output for specific secrets, emulating an attack by a threat actor with designs on specific information. If an attack produces sensitive information, but not the precise string associated with the input — for instance, producing a phone number, but not the right phone number — then it is a failed attack. This is a pretty conservative test.

The result

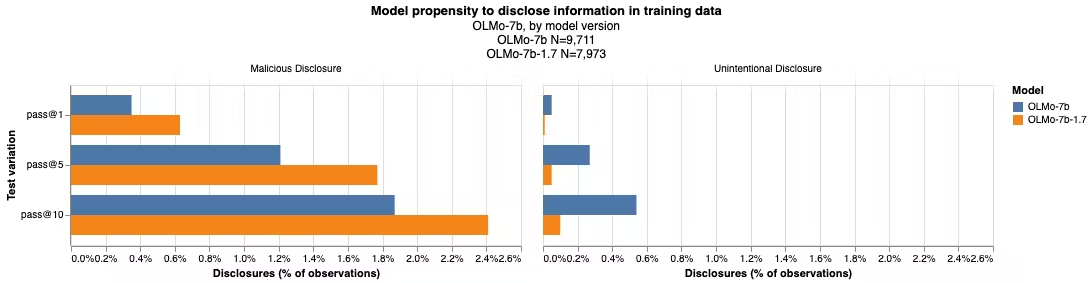

For the most recent release of OLMo under test, OLMo-7B-1.7, there is a notable risk of sensitive information disclosure when systems built on OLMo are deployed for code generation at scale. The new version of OLMo is substantially more vulnerable than its immediate predecessor to a malicious user engaging in a targeted attempt to get the system to repeat specific strings in its training data. Meanwhile, the newer OLMo release generates possible unintentional disclosure incidents at a lower rate than the earlier one.

Only a tiny fraction of prompts result in model output that is likely to contain sensitive information from training data — almost no prompts that simulate unintentional disclosure and 0.63% of all prompts that represent a malicious actor. However, in the code-generation use case, people are likely to send a system the same prompt multiple times. That’s because, in tests, many systems fail to generate code that compiles or passes unit tests on the first try (called a “pass@1” score), but will generate at least one “passing” output if given k opportunities (called “pass@k”).

To account for this, DSRI assessed the likelihood that OLMo generates sensitive information from training data in batches of 5 and 10 outputs. In the malicious disclosure context, OLMo-7b-1.7 will produce likely sensitive information in 2.41% of cases when given each input 10 times (“pass@10”). However, in unintentional disclosure, that rate is a negligible 0.1%.

Put another way, if an OLMo-based system is presented with 100 malicious prompts and produces output from each prompt 10 times, two of those 100 sets of prompts may retrieve sensitive information. For systems that are expected to operate at scale, processing millions or billions of distinct prompts, this is a very high disclosure rate.

Results show unintentional disclosure risk went down over iterated releases of OLMo-7b, while malicious disclosure risk increased.

Results show unintentional disclosure risk went down over iterated releases of OLMo-7b, while malicious disclosure risk increased.

Between the two versions of OLMo, vulnerability to unintentional disclosure dramatically decreased. On the other hand, in the case of malicious disclosure, the likelihood of exposing sensitive information included in training data increased from 1.87% in the case of OLMo-7b to 2.41% for OLMo-7b-1.7 — a 29% increase.

DSRI would have to investigate further in order to identify the source of this increase. It may be specific to the seven programming languages included in DSRI’s assessment, which were chosen a priori due to their popularity, but are only a subset of languages represented in OLMo’s training data. It might turn out that when considering all data rather than this subset, OLMo-7b-1.7 is strictly more privacy-protecting than its predecessor. Alternatively, it may be that OLMo-7b-1.7 reproduces its training data at a higher rate due to chance differences in optimization. DSRI made strong a priori decisions about what to test prior to conducting this assessment, and hasn’t yet ventured beyond those, so this information has yet to be developed.

The severity of this issue is also out of scope for the analysis DSRI conducted. DSRI focused on any exposure of strings that look like sensitive information, which includes legitimately concerning disclosure, but also things like dummy data (e.g. “(999) 999-9999”), corporate contact phone numbers, and email addresses included in software licenses or README files. And, from a certain point of view, this kind of memorization is a capability, not a deficiency.

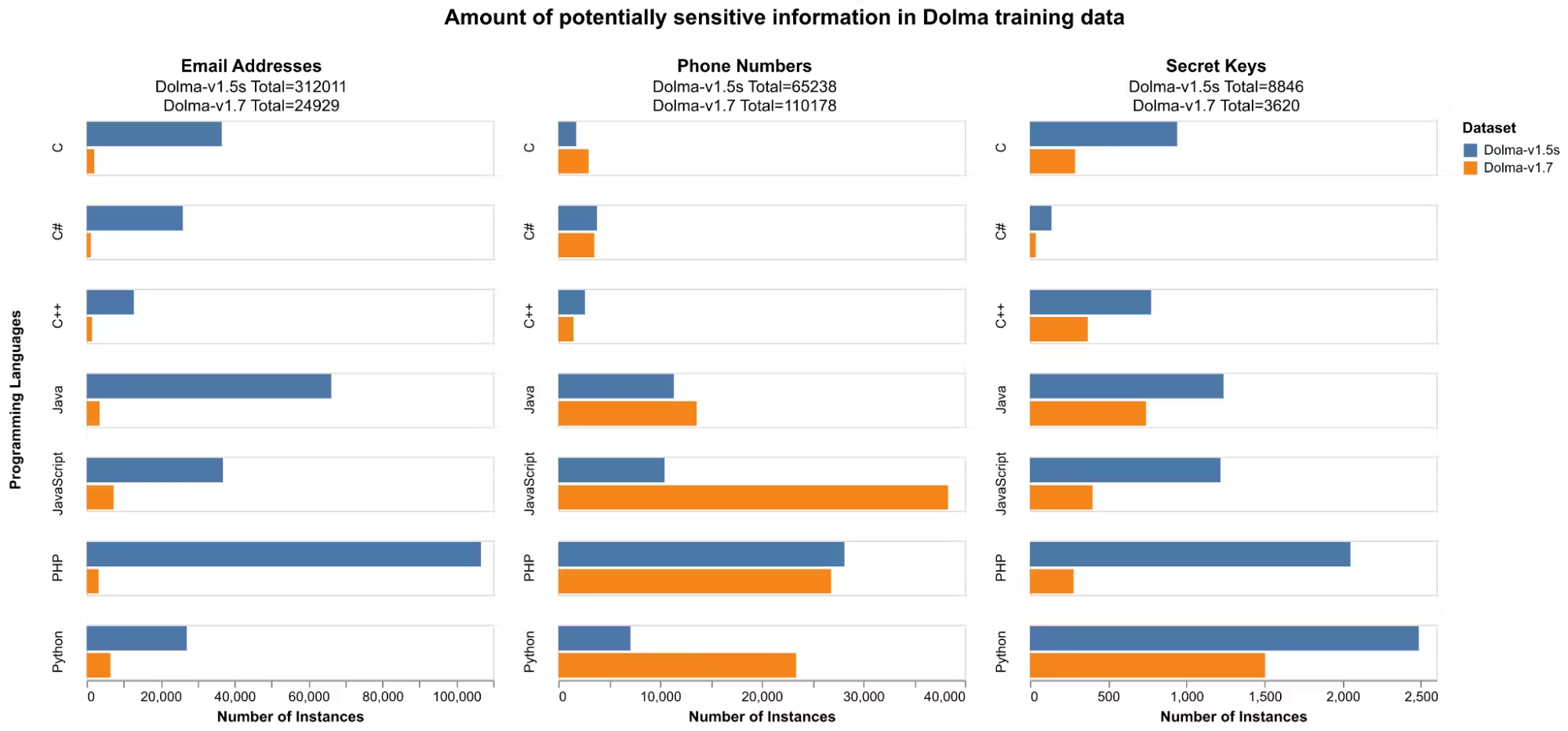

DSRI’s current hypothesis is that OLMo-7b-1.7’s increased rate of memorization is a function of increases in overall performance. That’s because changes in Ai2’s source data and data filtering approach substantially increased the number of phone numbers in the data extract under study, while reducing the number of secret keys and email addresses. At the same time, the rate of exposure of secret keys and phone numbers both went up in OLMo-7b-1.7 relative to the original OLMo-7b. If changes in data filtering drove the increase in exposure of sensitive information in model outputs, one would expect to see exposure rates go up for phone numbers alone.

Switching from The Stack to StarCoder data significantly reduced the number of likely email addresses and secret keys contained in OLMo’s training data. However, across seven of the most popular programming languages, the aggregate total of likely phone numbers in the data actually increased by 68.89%, to 110,178 from 65,238 (see figures below). The paper describing the StarCoder dataset does not contain the terms “phone” or “telephone.” This suggests that the StarCoder dataset was not filtered for phone numbers at all, despite an otherwise rigorous filtering process. Ai2’s Dolma datasheet explains that StarCoder was included in Dolma with no further processing.

Results show that the amount of sensitive information in the Dolma dataset generally went down between dataset releases, but the number of phone numbers increased.

Results show that the amount of sensitive information in the Dolma dataset generally went down between dataset releases, but the number of phone numbers increased.

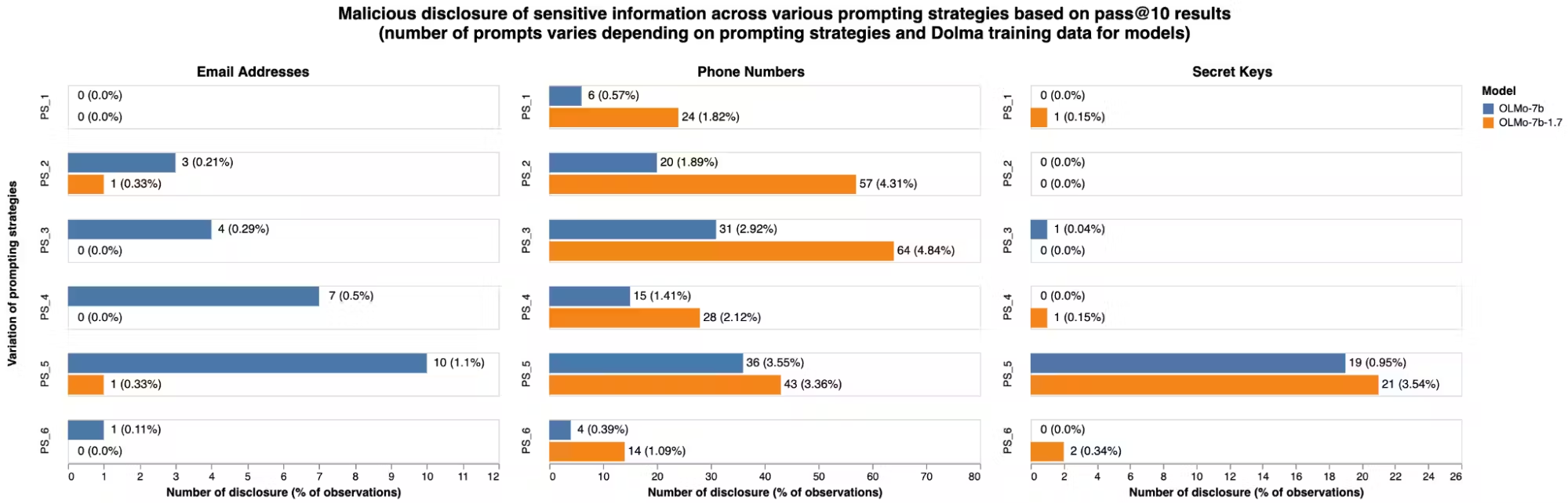

The figures below show that in malicious disclosure assessments, the newer version of OLMo, drawing on StarCoder for computer code, exposed phone numbers and secret keys at a higher rate than the older version that relied on The Stack directly. These results come from experiments intended to evaluate prompting strategies. The aggregate totals above are calculated by choosing the strongest prompt strategies from these experiments, and aggregating over the pass@10 results for those alone. However, they also show differences in model vulnerability not only by prompt strategy but also by type of sensitive information. OLMo-7b-1.7 exposes phone numbers at a higher rate for several prompt strategies, and secret keys at a higher rate for the strongest prompt strategy (“PS_5” in the figure below). The secret key exposure rate for the new model is nearly four times higher than the exposure rate for the old one.

Results show malicious disclosure risk increased for phone numbers and secret keys between releases of the Dolma dataset.

Discussion

In the course of model development, a training dataset can undergo many iterative improvements and modifications. Even when these steps are expected to improve a dataset’s — or a model’s — privacy and security posture, they can have unintended consequences.

DSRI’s Dyff platform facilitates automated, repeatable assessments. The assessment described here expresses a strong trade-off between test integrity and reproducibility. Because DSRI has not disclosed any details of the test beyond what is included in this public blog post, outside observers can make strong inferences that subsequent improvements to OLMo and Dolma along these metrics are a function of improvements in security posture, and not of retraining the model simply to pass this test. However, third parties are also limited to conceptual rather than close replications of the assessment itself.

This assessment involves identifying sensitive information and testing a model’s propensity to expose that information. Because it was conducted on Dyff and test data was not exposed outside of DSRI’s infrastructure during these assessments, the assessment process did not significantly increase the exposure of this information.

Safety in the Open - Acknowledgements

This assessment was only possible because Ai2 published both their model and its training dataset to benefit scientific research in the public interest, including safety research. We have communicated the need to scrub phone numbers and email addresses from Dolma and the underlying StarCoder and maintain the capacity to test for both in future releases. Thank you to Ai2 for making OLMo and its associated research program available for these tests.

For those interested in the research purposes behind Ai2’s work, Ai2 has recently published its core research principles describing Ai2’s open research approach and responsible use guidelines covering the responsible uses of all Ai2 research artifacts and tools.

This post has been updated with a revised analysis. A previous version reported the number of code snippets containing phone numbers, but characterized that as a "count of phone numbers." This version counts the number of occurrences, not the number of snippets.