Testing DeepSeek-R1

12 Jun 2025 ⋅ Jesse Hostetler ⋅ 15 min ⋅ #deepseek

At ULRI’s Digital Safety Research Institute (DSRI), we’re studying how to evaluate the risks posed to the public by modern AI technologies. Few AI systems have generated as much hype recently as DeepSeek’s DeepSeek-R1 model, which is one of the most capable open-weights models currently available. We were eager to get our hands on this model to see how its performance compares to other models we’ve tested.

Of course, these days, “highly capable” also means “very big” and therefore “expensive”. It’s important for anyone deploying these large models to make sure they’re getting the best possible performance for their cloud computing spending. Running DeepSeek-R1 in a minimal hardware configuration costs about $80/hour in our setup. In our first “see if it works” experiment, starting up the model would have taken several hours and cost hundreds of dollars before we even made the first inference (we quickly terminated that first attempt). Once it was running, we got generation performance of 26 output tokens / second, which is $854 / 1M tokens. For reference, OpenAI’s largest model bills $60 / 1M tokens as of 2025/02/24. After optimizing for startup time and concurrent request handling, we reduced startup cost to about $26 (~20 minutes), and inference cost to $22 / 1M tokens, which is 38x cheaper than our first attempt. Optimizations matter!

After reading this article, you’ll understand how to deploy DeepSeek-R1 on Kubernetes and the Google Cloud Platform, and you should have an idea of what you might need to change if you’re using a different cloud provider. You’ll also understand how to optimize the deployment to reduce costs for the offline batch-mode testing use case.

In future posts, we’ll present the results of the testing we’re now conducting on DeepSeek-R1 and other LLMs.

The problem

DeepSeek-R1 is about 700GB in size. With state-of-the-art GPUs like the Nvidia H100 having 80GB of memory each, and a typical cloud computing node having 8x H100s attached, this means we need to deploy the model in a multi-node configuration. While it’s never been easier to set something like this up, we do have a few things to keep in mind:

- All of the compute nodes need access to the model weights, so we need to store them in a way that supports multiple readers

- We need to set up the inter-node communication channels that allow data to flow between the nodes efficiently

- It’s expensive to run models this big, so we need to minimize startup time and maximize throughput

Deploying these models for testing is also a bit different from deploying them as “chat bot” services. We’re interested in running high-integrity, reproducible, batch-mode tests, and this use case leads to different priorities:

- We want to run a known version of the model offline without access to the Internet, which means we need to download and store the weights separately, rather than relying on on-demand downloading from the HuggingFace Hub as you’ll see in most tutorials.

- Our workloads involve starting a model, running some tests, and then shutting it down. As such, fixed costs from a slow start-up time could become significant quickly.

- Once the model is running, we want to maximize throughput (requests per second), but we don’t really care about interactive service metrics like time-to-first-token or request latency.

DSRI’s infrastructure

We implement our AI system tests within the Dyff Platform, which is an open-source Kubernetes-based platform we are developing to lower the barriers to entry for secure and reproducible AI assessment. We host our Dyff instance on the Google Cloud Platform (GCP) using GKE Autopilot. While Dyff can run entirely on Kubernetes with no additional cloud platform dependencies, in our production deployment we take advantage of some GCP features such as Google Cloud Storage. (By the way, the Terraform configuration for our production deployment is open source, too!)

While you’re certainly welcome to use Dyff to deploy your own models, in this post we’ll focus on the underlying Kubernetes and cloud platform resources that are required. In Dyff, these are managed via Kubernetes custom resources implemented in the Dyff Kubernetes operator.

Model storage

We’ve been through a few iterations on the best way to store ML models for serving. First, we stored them on Kubernetes PersistentVolumes that were compatible with the ReadOnlyMany (“rox”) access mode, which allows multiple readers. However, besides rox volumes being finicky to create, persistent volumes have the major drawback of being tied to an availability zone. This means that you can only run the ML model in the same zone, and you have to wait for GPUs to be available in that zone. They’re also roughly 2x the cost of storing the same data in object storage (that is, s3-like storage buckets).

Next, we tried storing the models in object storage and adding an initial “download” step to the model-running workflow, which would download the model files from storage onto a temporary PVC. This avoids creating a special rox volume, because each node can have its own copy, but it has the drawback that we have to download the weights in their entirety before we start loading them onto the GPUs. If the download step runs as an init container, we pay for the GPUs during the download step. If the download step runs in a separate Pod, we don’t pay for the GPUs, but then the PVC is again tied to an availability zone that may not have GPUs available.

Finally, we settled on using the FUSE CSI driver offered by GCP. This is a Kubernetes extension that makes object storage look like a filesystem that’s mounted in the Pod. This is the Right Way to do things. Model-loading involves sequential reads of large files, which means that there’s no real performance penalty for pretending you’re reading from a file system. It’s also inherently multi-reader compatible because the files are pulled dynamically in response to read operations, and it’s faster because the weights are loaded directly onto the GPU rather than having an intermediate step of writing them to a temporary volume.

Note that similar functionality seems to be available for AWS and Azure.

Using HuggingFace models offline

For security and test data integrity, we download the models we will test and store them within our Dyff instance, and then we run the models we’re testing without access to the Internet. Making HuggingFace (HF) models work in this “offline” environment has proven to be surprisingly difficult. And it seems like not many people are doing this, because there isn’t much information on the Web about how to do it. The summary is:

- Use huggingface_hub.snapshot_download() to fetch the model.

- Set all of the many HuggingFace env variables and flags that control where the HF cache is located to the directory you want to download into.

- Use transformers.utils.hub.move_cache(directory, directory) to “migrate” the HF cache version by “moving” it to the same location.

- Note that this is a workaround for an issue that may or may not still be present in newer versions of transformers.

- Deal with the presence of symbolic links in the HF cache, which is problematic because symlinks don’t exist in object storage systems. If using FUSE, you must download into a FUSE-mounted volume so that the FUSE driver can use its internal mechanisms to imitate symlinks. Prior to using FUSE, we walked the cache directory tree to discover the symlinks, stored them in a text file, and then re-created them when downloading the model to a real filesystem.

Tuning the FUSE driver

DeepSeek-R1 is big, and big things are expensive. We estimate it costs us about $80/hour to run the model in a configuration with 16x H100 GPUs. Notably, we pay for the GPUs while we’re loading the model onto them, and we load a new model instance for every test suite that we run, so we want loading to be fast.

The FUSE driver is essentially a proxy server for the object storage provider with a cache attached. It runs in a sidecar container in the Pod that’s using FUSE. It’s very important to allocate ample resources to this container. We use these options in our FUSE configuration:

- 2 vCPU per GPU

- 8Gi ephemeral storage per GPU

- 8Gi memory per GPU

- Mount options:

- file-cache:max-size-mb = 1/2 of allocated ephemeral storage

- enable-parallel-downloads = true

Note that these are educated guesses, and we err on the high side of resource allocation because on GCP, you pay for all of the CPUs and memory on a GPU node whether you use them or not. Enabling parallel downloads is critical, and this requires that a file cache is allocated. In this configuration, we get transfer speeds of 500-600 MB/s when loading the model, which keeps our billed start-up time for DeepSeek-R1 to around 20 minutes typically. (We could probably make this even faster).

For security, we run the model as an ordinary user, so we also need to set the uid and gid for the mount. We also mount only the sub-directory containing the model we need using the only-dir option.

Multi-node inference

We use the excellent vLLM system to run LLMs like DeepSeek-R1. vLLM comes with multi-node capabilities via Ray. Ray uses a “leader-worker” topology where there’s a single special “leader” process and multiple “worker” processes. In Kubernetes, when we need multiple instances of a Pod that have stable identities, we use a StatefulSet to deploy them. Here’s a StatefulSet spec created by the Dyff k8s operator for DeepSeek-R1, in most of its gory details:

apiVersion: apps/v1

kind: StatefulSet

metadata:

labels:

dyff.io/id: 3f8463ad69f74bb9b1114649598448d4

name: e3f8463ad69f74bb9b1114649598448d4i

namespace: workflows

spec:

podManagementPolicy: Parallel

replicas: 2

selector:

matchLabels:

dyff.io/id: 3f8463ad69f74bb9b1114649598448d4

serviceName: e3f8463ad69f74bb9b1114649598448d4i-nodes

template:

metadata:

annotations:

gke-gcsfuse/cpu-limit: "16"

gke-gcsfuse/cpu-request: "16"

gke-gcsfuse/ephemeral-storage-limit: 72Gi

gke-gcsfuse/ephemeral-storage-request: 72Gi

gke-gcsfuse/memory-limit: 72Gi

gke-gcsfuse/memory-request: 72Gi

gke-gcsfuse/volumes: "true"

labels:

dyff.io/id: 3f8463ad69f74bb9b1114649598448d4

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: cloud.google.com/gke-accelerator

operator: In

values:

- nvidia-h100-80gb

- key: cloud.google.com/gke-spot

operator: In

values:

- "true"

containers:

- args:

- --model

- /dyff/mnt/model/models--deepseek-ai--deepseek-r1/snapshots/f7361cd9ff99396dbf6bd644ad846015e59ed4fc

- --download-dir

- /dyff/mnt/model

- --max-model-len

- "16384"

- --trust-remote-code

- --tensor-parallel-size

- "8"

- --pipeline-parallel-size

- "2"

- --served-model-name

- d5f3920286c64a3e870beb84183721e8

- deepseek-ai/deepseek-r1

env:

- name: HF_DATASETS_OFFLINE

value: "1"

- name: HF_HOME

value: /dyff/mnt/model

- name: HUGGINGFACE_HUB_CACHE

value: /dyff/mnt/model

- name: TRANSFORMERS_CACHE

value: /dyff/mnt/model

- name: TRANSFORMERS_OFFLINE

value: "1"

- name: HF_MODULES_CACHE

value: /tmp/hf_modules

- name: HOME

value: /tmp

- name: TORCHINDUCTOR_CACHE_DIR

value: /tmp/torchinductor

- name: DYFF_INFERENCESESSIONS__MULTI_NODE

value: "1"

- name: DYFF_INFERENCESESSIONS__POD_INDEX

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.labels['apps.kubernetes.io/pod-index']

- name: DYFF_INFERENCESESSIONS__LEADER_HOST

value: e3f8463ad69f74bb9b1114649598448d4i-0.e3f8463ad69f74bb9b1114649598448d4i-nodes.workflows.svc.cluster.local

image: registry.gitlab.com/dyff/workflows/vllm-runner@sha256:fbf5a36f42450b6617950bc787b7f2bc38277324d456fff41cf36f87f941a5fc

name: e3f8463ad69f74bb9b1114649598448d4i

ports:

- containerPort: 8000

protocol: TCP

resources:

limits:

nvidia.com/gpu: "8"

requests:

cpu: "190"

memory: 1723Gi

nvidia.com/gpu: "8"

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

readOnlyRootFilesystem: true

runAsGroup: 1001

runAsNonRoot: true

runAsUser: 1001

seccompProfile:

type: RuntimeDefault

volumeMounts:

- mountPath: /tmp

name: tmp

- mountPath: /dyff/mnt/model

name: model

readOnly: true

- mountPath: /dev/shm

name: shm

nodeSelector:

cloud.google.com/gke-accelerator-count: "8"

restartPolicy: Always

securityContext:

fsGroup: 1001

seccompProfile:

type: RuntimeDefault

serviceAccountName: inferencesession-runner

volumes:

- ephemeral:

volumeClaimTemplate:

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 720Gi

volumeMode: Filesystem

name: tmp

- csi:

driver: gcsfuse.csi.storage.gke.io

readOnly: true

volumeAttributes:

bucketName: dyff-models-6rgnsiyc

mountOptions: implicit-dirs,only-dir=d5f3920286c64a3e870beb84183721e8,gid=1001,uid=1001,file-cache:max-size-mb:65536,file-cache:enable-parallel-downloads:true

name: model

- emptyDir:

medium: Memory

sizeLimit: 402654M

name: shm

Configuring the model runner

There’s obviously a lot going on here, some of which is specific to the Dyff Platform. This is the part that tells the model runner to use a multi-node configuration:

spec:

template:

spec:

...

- env:

...

- name: DYFF_INFERENCESESSIONS__MULTI_NODE

value: "1"

- name: DYFF_INFERENCESESSIONS__POD_INDEX

valueFrom:

fieldRef:

apiVersion: v1

fieldPath: metadata.labels['apps.kubernetes.io/pod-index']

- name: DYFF_INFERENCESESSIONS__LEADER_HOST

value: e3f8463ad69f74bb9b1114649598448d4i-0.e3f8463ad69f74bb9b1114649598448d4i-nodes.workflows.svc.cluster.local

These three environment variables are used by our model runner’s entrypoint script to set up the Ray cluster:

- DYFF_INFERENCESESSIONS__MULTI_NODE indicates that we want a multi-node configuration

- DYFF_INFERENCESESSIONS__POD_INDEX makes the index of the current Pod in the StatefulSet available

- DYFF_INFERENCESESSIONS__LEADER_HOST is the DNS name of the Pod that will run the Ray “leader” process. The format is

. . .svc.cluster.local. The service-name here is that of the “headless” Service that is associated with the StatefulSet.

Now, we build a Docker image based on the vLLM image with a different entrypoint script:

#!/bin/bash

# SPDX-FileCopyrightText: 2024 UL Research Institutes

# SPDX-License-Identifier: Apache-2.0

if [[ -z "${DYFF_INFERENCESESSIONS__MULTI_NODE}" ]]; then

exec python3 -m vllm.entrypoints.openai.api_server --host "0.0.0.0" --port "8000" "$@"

else

if [[ -z "${DYFF_INFERENCESESSIONS__POD_INDEX}" ]]; then

exit "DYFF_INFERENCESESSIONS__POD_INDEX not set"

fi

ray_port="6739"

if [[ "${DYFF_INFERENCESESSIONS__POD_INDEX}" == "0" ]]; then

# This is the "leader" pod

ray start --head --port="${ray_port}"

# This blocks forever

python3 -m vllm.entrypoints.openai.api_server --host "0.0.0.0" --port "8000" "$@"

else

# This is a "worker" pod

if [[ -z "${DYFF_INFERENCESESSIONS__LEADER_HOST}" ]]; then

exit "DYFF_INFERENCESESSIONS__LEADER_HOST not set"

fi

# --block keeps the process alive

ray start --block --address="${DYFF_INFERENCESESSIONS__LEADER_HOST}:${ray_port}"

fi

fi

If the current Pod is the “leader” (the Pod with index 0), we run ray start --head (“head” means “leader”), and then start the vLLM OpenAI API server. If the current Pod is a “worker”, we run ray start --address= to connect to the “leader”, and use the --block flag to keep the process alive forever.

Then, we just have to run vLLM with the appropriate options to match our hardware configuration:

spec:

template:

spec:

...

- args:

- --model

- /dyff/mnt/model/models--deepseek-ai--deepseek-r1/snapshots/f7361cd9ff99396dbf6bd644ad846015e59ed4fc

- --download-dir

- /dyff/mnt/model

- --max-model-len

- "16384"

- --trust-remote-code

- --tensor-parallel-size

- "8"

- --pipeline-parallel-size

- "2"

- --served-model-name

- d5f3920286c64a3e870beb84183721e8

- deepseek-ai/deepseek-r1

vLLM’s suggested multinode configuration is to set --tensor-parallel-size to the number of GPUs on each node, and --pipeline-parallel-size to the number of nodes.

The --max-model-len flag sets the model’s context length. We don’t need the full >200k context length that DeepSeek-R1 supports for our tests, so we reduce it to allow vLLM to allocate memory more effectively.

Exposing the model for inference requests

Finally, we create a Service pointed at the Pod that’s running the vLLM server so that it’s exposed for incoming inference requests. (Note that this is a different Service from the headless Service that is required by the StatefulSet):

apiVersion: v1

kind: Service

metadata:

labels:

dyff.io/id: 3f8463ad69f74bb9b1114649598448d4

name: e3f8463ad69f74bb9b1114649598448d4i

namespace: workflows

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8000

selector:

dyff.io/id: 3f8463ad69f74bb9b1114649598448d4

statefulset.kubernetes.io/pod-name: e3f8463ad69f74bb9b1114649598448d4i-0

type: ClusterIP

Notice how the .selector uses the statefulset.kubernetes.io/pod-name label to identify the “leader” Pod. This label is set automatically by the StatefulSet resource controller.

Resource configurations

There are a few other things to point out in the spec:

- We configured the FUSE driver using GKE-specific annotations in the Pod template spec.

- We requested nodes with the required GPU hardware using more annotations and node affinity settings, as well as requesting preemptible “spot” instances, which are much cheaper.

- We allocated 30% of available memory as shared memory mounted at /dev/shm, as vLLM requires for multi-GPU configurations.

- We created a large ephemeral volume mounted at /tmp that the vLLM framework and its dependencies can use as cache space. We also configured these libraries to use /tmp as their cache using various environment variables. By default, they attempt to create files in the user’s home directory, which doesn’t exist in our Docker container.

- We set podManagementPolicy: Parallel, which reduces startup time and cost by causing the nodes to start (and load the model) concurrently rather than sequentially.

We determine the resource requests based on the GPU count and the maximums for GKE Autopilot GPU Pods. GKE uses node-based billing for GPU nodes. This means we always pay for the whole node, so for simplicity, we just request all of the available resources: we start with the maximum requests, reserve some for DaemonSets as described in the GKE docs, reserve some more for the FUSE sidecar container, and allocate the rest to the model runner container.

Inference performance profiling

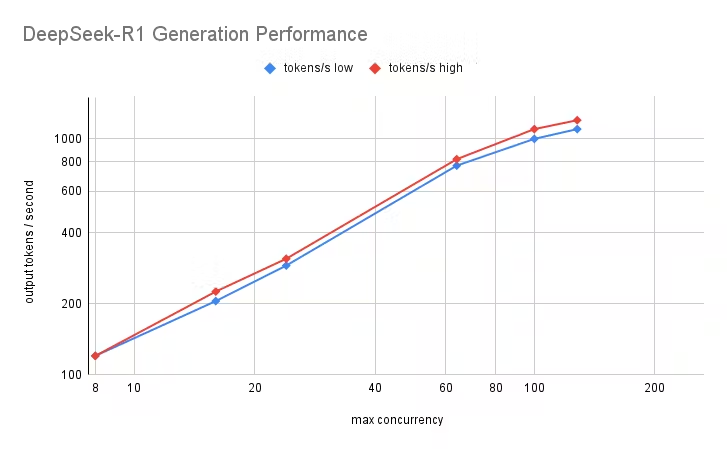

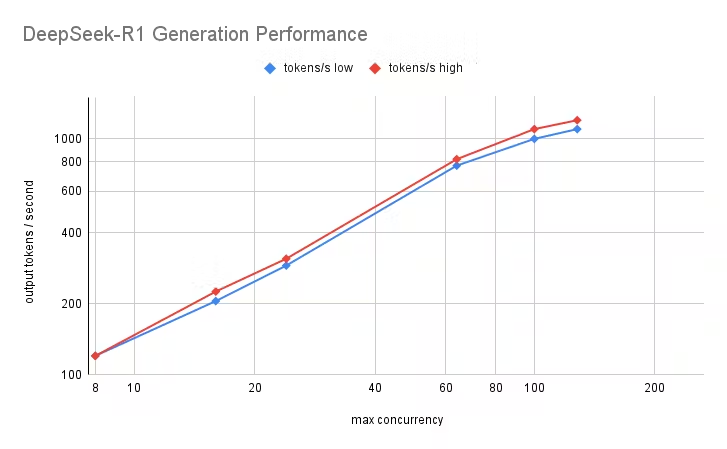

We’re mainly interested in running these models in “batch mode” so that we can feed an existing dataset through them as quickly as possible. To do this, we make many concurrent requests to the model server, taking advantage of vLLM’s adaptive batching capability. Unfortunately, it’s possible to trigger CUDA out-of-memory errors if the number of concurrent requests is too high. We profiled the model with representative data to determine the maximum number of requests that it could process reliably, and these results are shown in the following chart:

Concurrent requests vs. output token generation rate. The inference service raises a CUDA out-of-memory error somewhere between 128 and 160 concurrent requests.

Concurrent requests vs. output token generation rate. The inference service raises a CUDA out-of-memory error somewhere between 128 and 160 concurrent requests.

The system exhibits linear scaling up to about 100 concurrent requests, and it raises a CUDA out-of-memory error somewhere between 128 and 160 concurrent requests. After profiling, we set the --max-num-seq flag to prevent vLLM from exceeding this limit.

Wrap-up and additional resources

While the process of deploying a large LLM like DeepSeek-R1 is intimidating, it’s amazingly accessible once you understand what needs to be done. Most of the credit for this goes to the tremendous open-source ecosystem that has grown around AI technology, and cloud computing in general, over the last decade. That includes projects like HuggingFace, which somehow allows anyone to download 700GB of model weights for free; vLLM and Ray, which together let you create multi-node multi-GPU deployments with a few command line options; and Kubernetes itself, which makes all the infrastructure cloud-agnostic and portable. I was around for the creation of AlexNet, when running a modest CNN on normal-sized JPEGs required writing custom CUDA kernels. Things are much easier now! One thing we didn’t address was creating replicas of the entire multi-node setup to increase throughput. This is not straightforward to do with standard Kubernetes resources, because usually we would use a Deployment to create identical replicas of something, but we can’t have a Deployment of StatefulSets. One solution is to install custom k8s resources such as LeaderWorkerSet that implement this missing functionality. This tutorial by Google goes into more details about deploying a model in this way.

Meanwhile, at DSRI, we’re already using our DeepSeek-R1 deployment on Dyff to study how its performance compares to that of other open-weights models in use-cases that affect you every day. We’ll have more to say about those results in future articles.